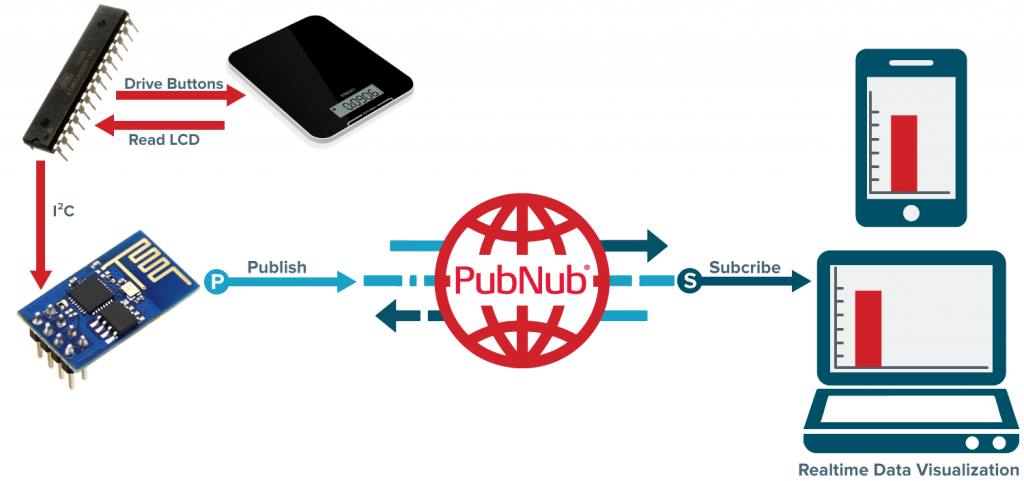

Earlier this year, we demonstrated the power of real-time data streaming and the Internet of Things with our connected coffee maker, an application that tracks the volume of coffee left in the pot and streams the data to a live-updating dashboard in real time. This project showed how easily data can be published from a remote sensor node (in this case, a digital scale) using PubNub, and the value of being able to subscribe to that information from any other device in the world (in this case, a real-time dashboard).

In case you missed it …

- Take a look at the original project.

- Browse through the source code repository.

- Check out the live UI in action.

In this post, we're beefing up our IoT coffee maker and adding a new feature to our web-based dashboard UI: Storage and Playback (also known as our history API). This feature retrieves previously published messages, and lets you limit both how many messages are returned and the time frame they were published in.

Why Use Storage & Playback?

The original dashboard UI worked great, but there was one major flaw. A publish event from the scale only occurs when new information is available, i.e., a change in the weight of the object on the scale; however, a timer also exists to ensure there is at least one publish event every two minutes to keep the connection alive.

This means that when first loaded, the web page could take up to two minutes to display any data on the graph as it waits for information to be published. This is certainly not desirable, as it can lead to the belief that the scale is not working correctly. After all, our IoT application needs to be real-time.

There are several ways to solve this issue, some better than others:

1. The web page could display a default value when it first loads.

This would work, but it is far from ideal. A user might interpret this initial level as the actual current level – after all, this is real-time data we are talking about.

2. The scale could publish data much more often.

While this approach might seem appealing to some, it is a bad design. Publishing more often than necessary will waste power on the remote device and clog the channel with redundant information. This solution also requires an update to the remote device firmware, which is not always feasible or, at least, easy.

3. The remote device could use PubNub Presence, and publish on any new “join” events.

This solution is better than the first two, but it still requires a firmware upgrade on the remote device. It also gives the scale more work to do, as an unknown number of devices could join or leave the channel at any given time. The only major advantage to this approach is that the displayed information will always be in real time.

4. The web page can use PubNub Storage and Playback to retrieve the last published message.

Out of all the solutions presented, this seems to be the best. It puts the extra work on the web page as opposed to the remote device, and doesn’t require any firmware updates. If no new data has been received, the last published message will still include relevant information, and any changes on the scale side will result in an immediate update with a new publish event anyway.

Adding Storage & Playback

One of the great things about PubNub is the ability to add features to your account. To enable Storage & Playback, log in to the PubNub Admin Portal, and click ADD for Storage and Playback in the Features section.

The next step is deciding how to use Storage and Playback to our advantage. Recall that we are using the EON JavaScript framework to display the coffee pot level data in a graph. The eon.chart() method accepts an optional parameter, connect, which specifies a callback function that executes when PubNub first makes a connection. This is exactly what we need: a function call triggered when the page is first loaded! Let’s tell EON to call a function named historyCall when it first connects.
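As a rough sketch of that wiring, the chart options might look like the following. Only the connect parameter comes from the description above. The channel name, the "#chart" selector, the buildChartOptions helper, and the pubnub and generate options are assumptions for illustration, so check them against the EON version you are using.

```javascript
// Hypothetical channel name; substitute the one your scale publishes on.
const CHANNEL = "coffee-scale";

// Placeholder callback; the real one would query Storage and Playback.
function historyCall() {
  console.log("Connected; time to fetch recent history.");
}

// Build the options object handed to eon.chart(). Only `connect` is the
// parameter discussed in the post; the other keys are assumed for illustration.
function buildChartOptions(pubnub, onConnect) {
  return {
    pubnub: pubnub,
    channel: CHANNEL,
    generate: { bindto: "#chart" }, // hypothetical DOM selector
    connect: onConnect, // executes when PubNub first makes a connection
  };
}

// In the browser, with EON loaded and a PubNub instance in `pubnub`:
// eon.chart(buildChartOptions(pubnub, historyCall));
```

Keeping the options in a small helper makes it easy to confirm that the callback is wired in without loading the chart itself.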

Now we just need to define the historyCall() function, but what should it actually do? There should definitely be a way to retrieve the last published message, but what if that message is really old? Since it is known that there will always be at least one publish event every two minutes, we really only care about the last few minutes of history. If no messages exist in that timespan, there is either something wrong with the scale or it has lost power.

Great news: Storage and Playback can handle all of this for us!

Using the History API

Since we are using JavaScript in the web page, a quick look at the PubNub JavaScript API reference is in order. There are numerous parameters which can be used with pubnub.history(), but we only care about a few of them:

- callback – What to do on success.

- channel – Channel which owns the desired history.

- count – How many messages should be returned.

- end – Time token delimiting the end of the history time slice.

- reverse – Specify the order of returned messages.

Specifying the start parameter without end will return messages older than and up to the start time token. Specifying end without start will return messages matching the end time token and newer. This can seem counter-intuitive at first, so be careful!

The next step is generating the end time token. A safe time slice to use is the past five minutes, so we need to get the current time, subtract five minutes from it, and convert that value into a PubNub time token. In modern browsers, JavaScript offers the static method Date.now(), which returns the current Unix time in milliseconds.

This is easily converted to seconds and set to a time five minutes into the past by first dividing by 1000 and then subtracting 300 seconds.

Finally, converting to a PubNub time token can be done by multiplying this value by 10,000,000.
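The three steps above can be sketched as a single helper. The function name pastTimetoken is hypothetical, and the Math.floor call is an addition here to drop the fractional second before scaling.

```javascript
// Convert a Unix time in milliseconds into a PubNub time token that lies
// `secondsBack` seconds in the past (300 seconds = five minutes).
function pastTimetoken(nowMs, secondsBack) {
  // Milliseconds to whole seconds, then step back in time.
  const unixSeconds = Math.floor(nowMs / 1000) - secondsBack;
  // Scale seconds up to a PubNub time token.
  return unixSeconds * 10000000;
}

// Time token for five minutes before the current moment.
const end = pastTimetoken(Date.now(), 300);
console.log(end);
```

The result is larger than Number.MAX_SAFE_INTEGER, but it stays exact here because it is a whole number of seconds multiplied by 10,000,000. For arbitrary time tokens, a string or BigInt would be the safer representation.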

FUN FACT: Unix time is a standard used in many systems. It is the number of seconds that have elapsed since the zero hour of UTC (Coordinated Universal Time) on Thursday, January 1, 1970. Converting between a calendar date and Unix time is straightforward, and numerous calculators are available for use.

Finally, we want to check if any messages exist in that time slice. The history call response payload is a JSON array of three elements. The first element is a JSON array of published messages, while the second and third are the start and end time tokens, respectively.

If no messages are returned in the payload, the time tokens will be zero. A simple check against this should suffice in determining if any messages exist. If a message is found in the history time slice, it is republished on the channel; otherwise, a message is displayed on the web page to alert users of a possible scale error.
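Putting the pieces together, a minimal sketch of the callback could look like this. It assumes the callback-style pubnub.history() and pubnub.publish() calls with the parameters listed earlier. The channel name, the makeHistoryCall and handleHistory helpers, and the showError function are hypothetical, and count: 1 is an assumption made here to fetch only the most recent message.

```javascript
// Hypothetical channel name; substitute the one your scale publishes on.
const CHANNEL = "coffee-scale";

// Inspect the three-element history payload: [messages, start, end].
function handleHistory(payload, onMessage, onMissing) {
  const messages = payload[0];
  const start = payload[1];
  // No messages in the slice: the list is empty and the time tokens are zero.
  if (messages.length === 0 || Number(start) === 0) {
    onMissing();
    return;
  }
  // Take the last entry in the returned list.
  onMessage(messages[messages.length - 1]);
}

// Returns a zero-argument function suitable for EON's connect parameter.
function makeHistoryCall(pubnub, showError) {
  return function historyCall() {
    // Time token for five minutes ago.
    const end = (Math.floor(Date.now() / 1000) - 300) * 10000000;
    pubnub.history({
      channel: CHANNEL,
      count: 1, // assumption: only the latest message is needed
      end: end,
      callback: function (payload) {
        handleHistory(
          payload,
          function (message) {
            // Republish so the live graph picks the value up immediately.
            pubnub.publish({ channel: CHANNEL, message: message });
          },
          showError
        );
      },
    });
  };
}

// Usage in the page, with a real PubNub instance and an alert function:
// const historyCall = makeHistoryCall(pubnub, showScaleError);
```

Passing the PubNub instance and the error handler in as arguments keeps the payload check separate from the page, so it can be exercised with a stub before touching the live dashboard.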

Even though only the last couple of minutes of published history are important, setting the time slice to a slightly larger range will account for any discrepancies in time from one machine to another. During testing, I found out that my computer was actually returning a time 71 seconds in the past!

Wrapping Up

Putting all of this together, we end up with a much more functional website with positive changes to user interaction and absolutely no modification to the remote device firmware or operation. Adding an on-connect callback to the EON graph was super easy, and using the Storage and Playback API was the perfect solution to our problem. Now, there is no delay in the data displayed by the website, and there is a user alert if no recent data has been published.

PubNub provides many different tools for the job, but it’s up to you to decide when and why to use them! Remember to check out the original project as well as the modified HTML code on the gh-pages branch of the project repository.